AI has long been a buzzword. We first saw it used in the consumer space: in social media, e-commerce, and even our music preferences. In the past few years it has started to make its way into the enterprise space, especially in cyber security.

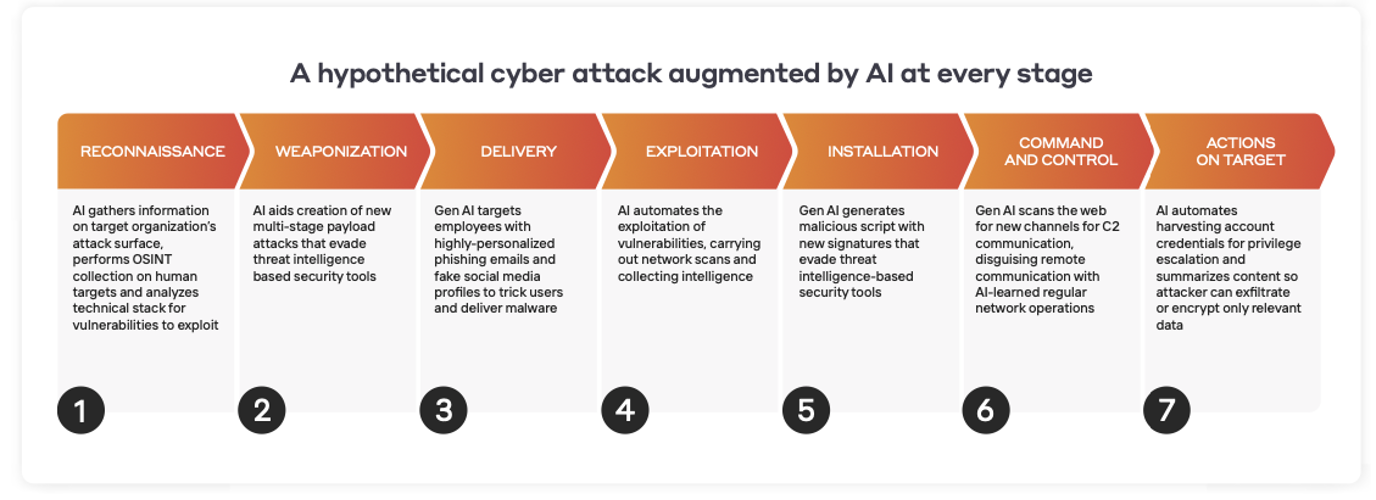

Increasingly, we see threat actors utilizing AI in their attack techniques. This is inevitable given the advancements in AI technology, the lower barrier to entry to the cyber security industry, and the continued profitability of being a threat actor. In a survey of security decision makers across industries such as financial services and manufacturing, 77% of respondents expect weaponized AI to lead to an increase in the scale and speed of attacks.

Defenders are also ramping up their use of AI in cyber security, with more than 80% of the respondents agreeing that organizations require advanced defenses to combat offensive AI. The result is a ‘cyber arms race’, with adversaries and security teams in constant pursuit of the latest technological advancements.

An approach based on rules and signatures is no longer sufficient in this evolving threat landscape. Because of this collective need, we will continue to see a push for AI innovation in this space as well. By 2025, cyber security technologies will account for 25% of the AI software market.

Despite the intrigue surrounding AI, many people have a limited understanding of how it truly works. The mystery of AI technology is what piques the interest of many cyber security practitioners. As an industry we also know that AI is necessary for advancement, but there is so much noise around AI and machine learning that some teams struggle to understand it. The paradox of choice leaves security teams more frustrated and confused by all the options presented to them.

Identifying True AI

You first need to define what you want the AI technology to solve. This might seem trivial, but many security teams forget to come back to the fundamentals: what problem are you addressing? What are you trying to improve?

Not every process needs AI. Some processes simply need automation; these are the more straightforward parts of your business. Larger, more complex systems require AI. The crux is identifying these parts of your business, applying AI to them, and being clear about what you are going to achieve with these AI technologies.

For example, when it comes to factory floor operations or tracking leave days of employees, businesses employ automation technologies, but when it comes to business decisions like PR strategies or new business exploration, AI is used to predict trends and help business owners make these decisions.

Similarly, in cyber security, when dealing with known threats such as known malware and malicious hosting sites, automation is great at keeping track of them; workflows and playbooks are also best handled with automation tools. However, when it comes to unknown unknowns like zero-day attacks, insider threats, IoT threats and supply chain attacks, AI is needed to detect and respond to these threats as they emerge.

Automation is often communicated as AI, and it becomes difficult for security teams to differentiate. Automation helps you to quickly make a decision you already know you will make, whereas true AI helps you make a better decision.

Key ways to differentiate true AI from automation:

- The Data Set: In automation, what you are looking for is very well-scoped. You already know what you are looking for, and you are just accelerating the process with rules and signatures. True AI is dynamic. You no longer need to define the activities that deserve your attention; the AI highlights and prioritizes them for you.

- Bias: When we define what we are looking for, we inherently impose our human biases on those decisions. We are also limited by our knowledge at that point in time, which leaves out the crucial unknown unknowns.

- Real-time: Every organization is always changing, and it is important that AI takes all that data into consideration. True AI that works in real time and also adapts as your organization grows is hard to find.
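
To make the contrast concrete, here is a deliberately small sketch. It is not how any particular product works: the blocklist, host names, traffic figures and the z-score threshold are all made up for illustration, and a plain z-score is only a toy stand-in for a learned baseline. The point is simply that a signature check can only answer "have we seen this before?", while a baseline-driven check asks "is this unusual for this device?".

```python
from statistics import mean, stdev

# Hypothetical blocklist: the "signature" approach only matches what is already known.
KNOWN_BAD_HOSTS = {"malware.example", "phish.example"}


def signature_match(host: str) -> bool:
    """Automation: flag a connection only if its host is already on the list."""
    return host in KNOWN_BAD_HOSTS


def anomaly_score(history: list[float], observed: float) -> float:
    """Baseline approach: how many standard deviations `observed` lies from
    this device's own history (a plain z-score, used here as a toy stand-in)."""
    if len(history) < 2:
        return 0.0  # not enough data to form a baseline
    spread = stdev(history)
    if spread == 0:
        return 0.0 if observed == history[0] else float("inf")
    return abs(observed - mean(history)) / spread


# Daily outbound megabytes for one device (made-up numbers).
uploads_mb = [100.0, 110.0, 90.0, 105.0, 95.0]

# A host nobody has listed yet: the rule has nothing to say about it.
print(signature_match("new-host.example"))
# The same device suddenly uploading far more than usual stands out
# against its own history, even though no rule described it in advance.
print(anomaly_score(uploads_mb, 400.0) > 3.0)
```

Note that the baseline here is fixed once; a system that meets the "real-time" criterion above would keep updating that history as the organization changes.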

Our AI Research Centre has produced numerous papers on the applications of true AI in cyber security. The Centre comprises more than 150 members and has more than 100 patents and patents pending. Some of the featured white papers include research on Attack Path Modeling and using AI as a preventative approach in your organization.

Integrating AI Outputs with People, Process, and Technology

Integrating AI with People

We are living in a time of trust deficit, and that applies to AI as well. As humans we can be skeptical of AI, so how do we build trust in AI such that it works for us? This applies not only to the users of the technology, but to the wider organization as well. Since this is the People pillar, the key factors in achieving trust in AI are education, culture, and exposure. In a culture where people are open to learning and trying new AI technologies, we will naturally build trust in AI over time.

Integrating AI with Process

Then we should consider the integration of AI and its outputs into your workflows and playbooks. To make decisions around that, security managers need to be clear about what their security priorities are, or which security gaps a particular technology is meant to fill. Regardless of whether you have an outsourced MSSP/SOC team, a 50-strong in-house SOC team, or even just a two-person team, it is about understanding your priorities and assigning the proper resources to them.

Integrating AI with Technology

Finally, there is the integration of AI with your existing technology stack. Most security teams deploy different tools and services to help them achieve different goals, whether it is a tool such as a SIEM, a firewall, or endpoint protection, or a service such as pentesting or a vulnerability assessment exercise. One of the biggest challenges is putting all of this information together and pulling actionable insights out of it. Integration on multiple levels is always challenging with complex technologies because these technologies can rate or interpret threats differently.
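
As a small illustration of why "rating threats differently" matters, the sketch below maps three differently scaled severities onto one shared 0 to 1 scale so they can be ranked together. Everything here is an assumption made for the example: the tool names, the 0-100 SIEM score and the firewall label weights are hypothetical, and only the 0-10 range of a CVSS-style score reflects a real convention.

```python
# Hypothetical severity scales; real products define their own.
SCALES = {
    "siem": (0.0, 100.0),         # numeric risk score, assumed range 0-100
    "vuln_scanner": (0.0, 10.0),  # CVSS-style base score, range 0-10
}
# Assumed weights for a tool that reports labels instead of numbers.
FIREWALL_LABELS = {"low": 0.25, "medium": 0.5, "high": 0.75, "critical": 1.0}


def normalize(tool: str, raw) -> float:
    """Map a tool-specific severity onto a shared 0.0-1.0 scale."""
    if tool == "firewall":
        return FIREWALL_LABELS[str(raw).lower()]
    low, high = SCALES[tool]
    clamped = min(max(float(raw), low), high)  # guard against out-of-range input
    return (clamped - low) / (high - low)


# Made-up findings: (tool, severity as that tool reports it).
findings = [
    ("siem", 80),
    ("vuln_scanner", 9.8),
    ("firewall", "Medium"),
]

# Rank all findings together once they share a scale.
ranked = sorted(findings, key=lambda f: normalize(*f), reverse=True)
for tool, raw in ranked:
    print(f"{tool:13} raw={raw!s:7} normalized={normalize(tool, raw):.2f}")
```

A linear rescale like this is the crudest possible choice. It says nothing about whether an "80" from one tool really means the same risk as an "8.0" from another, which is exactly the interpretation problem described above.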

Security teams often find themselves spending the most time making sense of the output of different tools and services. For example, they take the findings of a pentesting report and try to enhance SOAR configurations, look at SOC alerts to advise firewall configurations, or use vulnerability assessment reports to scope third-party Incident Response teams.

These tools can have a strong mastery of large volumes of data, but ultimately ownership of the knowledge should still lie with the human teams, and the way to achieve that is with continuous feedback and integration. It is no longer efficient to use human teams to carry this out at scale and at speed.

The Cyber AI Loop is Darktrace’s approach to cyber security. The four product families make up a key aspect of an organization’s cyber security posture. Darktrace PREVENT, DETECT, RESPOND and HEAL each feed back into a continuous, virtuous cycle, constantly strengthening each other’s abilities.

This cycle augments humans at every stage of an incident lifecycle. For example, PREVENT may alert you to a vulnerability which holds a particularly high risk potential for your organization. It provides clear mitigation advice, and while you act on this, PREVENT will feed into DETECT and RESPOND, which are immediately poised to kick in should an attack occur in the interim. Conversely, once an attack has been contained by RESPOND, it will feed information back into PREVENT, which will anticipate an attacker’s likely next move. Cyber AI Loop helps you harden security in a holistic way so that month on month, year on year, the organization continuously improves its defensive posture.

Explainable AI

Despite its complexity, AI needs to produce outputs that are clear and easy to understand in order to be useful. In the heat of the moment during a cyber incident, human teams need to quickly comprehend: What happened here? When did it happen? What devices are affected? What does it mean for my business? What should I deal with first?

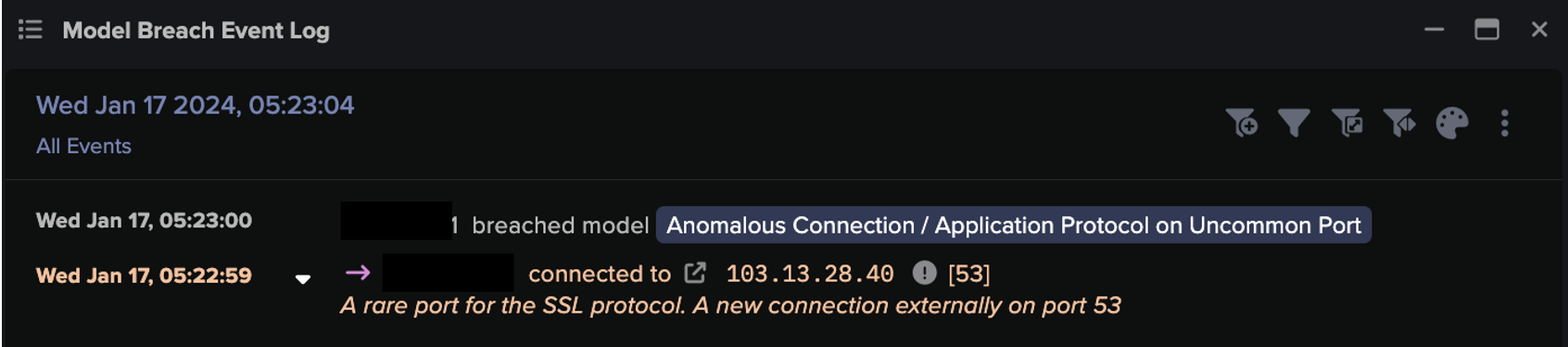

To this end, Darktrace applies another level of AI on top of its initial findings that autonomously investigates in the background, reducing a mass of individual security events to just a few overall cyber incidents worthy of human review. It generates natural-language incident reports with all the relevant information for your team to make judgements in an instant.
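
The idea of reducing many events to a few incidents can be shown with a very simple grouping rule. To be clear, this is not Darktrace's method, which the article does not describe in detail. It is a minimal sketch that assumes events on the same device within a fixed time gap belong together; the device names, descriptions and the 600-second threshold are invented for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Incident:
    device: str
    events: list = field(default_factory=list)  # (timestamp_seconds, description)


def group_events(events, max_gap_seconds=600):
    """Merge events on the same device into one incident when each event
    follows the previous one within `max_gap_seconds` (an assumed threshold)."""
    incidents = []
    open_by_device = {}  # device -> most recent incident for that device
    for ts, device, description in sorted(events):
        current = open_by_device.get(device)
        if current is None or ts - current.events[-1][0] > max_gap_seconds:
            # No incident yet for this device, or the last one has gone quiet.
            current = Incident(device)
            incidents.append(current)
            open_by_device[device] = current
        current.events.append((ts, description))
    return incidents


# Made-up events: (timestamp in seconds, device, description).
raw_events = [
    (0, "laptop-7", "new external domain contacted"),
    (120, "laptop-7", "unusual SMB file access"),
    (300, "laptop-7", "large outbound upload"),
    (200, "printer-2", "firmware check-in"),
    (9000, "laptop-7", "new external domain contacted"),
]

for incident in group_events(raw_events):
    summary = "; ".join(desc for _, desc in incident.events)
    print(f"{incident.device}: {len(incident.events)} event(s): {summary}")
```

Even this naive rule turns five events into three items to review. Deciding which of those items is worth a human's time, and explaining why, is the harder part that the rest of this section is concerned with.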

Cyber AI Analyst takes into consideration not only network detections but also your endpoints, your cloud space, IoT devices and OT devices. It also looks at your attack surface and the associated risks to triage alerts and show you the highest-priority ones, those that could cause maximum damage to your organization. These insights are not only delivered in real time but are also unique to your environment.

This also helps address another topic that frequently comes up in conversations around AI: false positives. This is of course a valid concern: what is the point of harvesting the value of AI if it means that a small team now must look at thousands of alerts? But we have to remember that while AI allows us to make more connections across vast volumes of logs, its goal is not to create more work for security teams, but to augment them instead.

To ensure that your business can continue to own these AI outputs and more importantly the knowledge, Explainable AI such as that used in Darktrace’s Cyber AI Analyst is needed to interpret the findings of AI, to ensure human teams know what happened, what action (if any) the AI took, and why.

Conclusion

Every organization is different, and its security should reflect that. However, some fundamental common challenges of AI in cyber security are shared amongst all security teams, regardless of size, resources, industry vertical, and culture. Their cyber strategy and maturity levels are what set them apart. Maturity is not defined by how many professional certifications or how many years of experience the team has. A mature team works together to solve problems. They understand that while AI is not the silver bullet, it is a powerful bullet that, if used right, will autonomously harden the security of the complete digital ecosystem, while augmenting the humans tasked with defending it.